How to integrate cutting-edge technologies into a trusted digital lending space

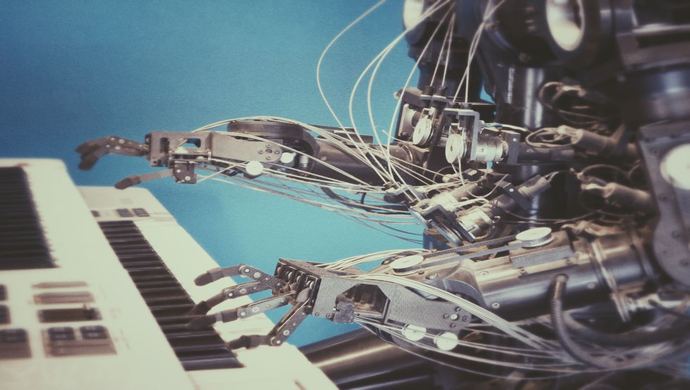

From fraud prevention to investment predictions and marketing, Machine Learning (ML) and Artificial Intelligence (AI) are among the most recent cutting-edge developments in the finance industry.

Particularly in the digital lending space, the next step to truly integrate these technologies is to build consumer trust in them.

The trust barrier facing machine intelligence

A recent global study by Pegasystems found that only 35 per cent of survey respondents felt comfortable with AI.

More specific to the finance industry is HSBC’s Trust in Technology study, which found that only 7 per cent of respondents would trust an AI to open a bank account, and 11 per cent would trust an AI to dispense mortgage advice.

Notably, the dominant concern was that AI cannot understand our needs as well as another human being.


This trust barrier is the challenge facing banks, traditional financial institutions and fintechs such as digital lenders.

Overcoming the lack of empathy in AI

Be it opening a bank account or having loan applications screened by AI, consumers are uncomfortable with having a machine “in charge”, despite the fact that an AI could possibly reduce human bias and personal preferences in granting loans and approving deals.

First, digital lenders can overcome the lack of empathy in AI by educating their consumers about how their algorithm works and what the requirements are.

For example, they can explore ways to increase algorithmic accountability, including the possibility of having algorithms reviewed by a regulatory board.

Over-communicating how AI is deployed to screen applicants is crucial.

For example, by taking personal bias out of the equation, there are fewer chances for people to take advantage of personal connections to get a loan approved. With AI, applicants would be assessed based on their qualifications alone.

It is also important to be transparent and stringent in how funds are handled. For instance, P2P lending platforms like Validus do not keep investor funds in their own account.

Funds are held in escrow until they are disbursed to borrowers.

Digital lenders should emphasise the due diligence required for handling monies, especially given that they have less face-to-face interaction with customers.

Getting past the interpretability barrier in ML

The interpretability barrier is a long-standing issue in AI and ML. It refers to how a machine can yield accurate results yet be unable to explain how it reached them.


This is a constant source of frustration to consumers, who are disinclined to trust what they cannot understand.

To get past the interpretability barrier, it is important for digital lenders to explain, in simple terms, what their AI cannot, especially when the AI processes many factors and data points.

For example, the Credit Bureau of Singapore (CBS) can use its credit scoring system to explain in general terms which factors contribute to bad credit, even if the exact impact of each factor cannot be disclosed.
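One way to picture this kind of explanation is a small Python sketch that names the factors that hurt a score the most while keeping the weights private. The factor names, weights and the `top_negative_factors` helper are all hypothetical, chosen only for illustration; they do not describe how CBS or any lender actually scores credit.

```python
# Hypothetical scorecard weights, for illustration only.
# These are NOT the values used by any real credit bureau or lender.
WEIGHTS = {
    "late_payments": -40.0,
    "credit_utilisation": -25.0,
    "recent_enquiries": -10.0,
    "account_age_years": 5.0,
}


def top_negative_factors(applicant, weights=WEIGHTS, limit=2):
    """Return the names of the factors that lowered the score most.

    Only factor names are returned, so the applicant learns what to
    improve without the lender disclosing exact weights.
    """
    contributions = {
        name: weight * applicant.get(name, 0.0)
        for name, weight in weights.items()
    }
    # Keep only factors that pulled the score down, most harmful first.
    negative = sorted(
        (value, name) for name, value in contributions.items() if value < 0
    )
    return [name for _, name in negative[:limit]]


applicant = {
    "late_payments": 3,
    "credit_utilisation": 0.9,
    "recent_enquiries": 4,
    "account_age_years": 2,
}
print(top_negative_factors(applicant))
```

A real model would likely be far more complex than a weighted sum, but the principle is the same: the ranking can be shared with the applicant even when the numbers behind it cannot.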

Prove and acknowledge the criticality

Criticality refers to the degree of risk posed to the consumer should the AI make a mistake. The higher the degree of criticality, the more important it is to prove the AI’s accuracy.

Digital lenders must acknowledge that their AI has a higher degree of criticality than most consumer services, such as AIs tasked with recommending the next Netflix movie.

If a loan of US$300,000 is disbursed to a company that cannot repay it, the result is significant financial damage to a digital lender and its investors.

To prove and acknowledge criticality, digital lenders should constantly communicate the provability of their AI through benchmarking, repeated simulations and backtesting.

For example, the digital lender’s default rate compared to the top three banks should be included in regular reporting to investors.
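As a rough illustration of such a backtest, the Python sketch below computes the default rate among loans a model approved in the past and sets it beside a benchmark. The loan records, the `default_rate` helper and the benchmark figure are invented for this example and are not real lender or bank data.

```python
def default_rate(loans):
    """Share of approved loans that later defaulted.

    Returns None when no loans were approved, since the rate is undefined.
    """
    approved = [loan for loan in loans if loan["approved"]]
    if not approved:
        return None
    defaults = sum(1 for loan in approved if loan["defaulted"])
    return defaults / len(approved)


# Hypothetical historical decisions; rejected loans have no known outcome.
history = [
    {"approved": True, "defaulted": False},
    {"approved": True, "defaulted": False},
    {"approved": True, "defaulted": True},
    {"approved": True, "defaulted": False},
    {"approved": False, "defaulted": None},
]

BENCHMARK_RATE = 0.30  # hypothetical benchmark, not a real bank figure

rate = default_rate(history)
print(f"Backtested default rate: {rate:.1%} vs benchmark {BENCHMARK_RATE:.1%}")
```

Repeating the same calculation across different time periods and loan segments would give investors a fuller picture than a single headline number.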

Build confidence in ML and AI

Before ML and AI, financial institutions had to overcome significant distrust when algorithmic trading was first used by banks in the 1980s and 1990s.

By constantly communicating proof of accuracy, explaining the concepts behind the algorithm’s decision making, and exercising corporate responsibility, financial institutions have successfully normalised funds that are run purely by algorithms.

Digital lenders can have confidence that, by addressing the issues in a similarly proactive manner, they can eventually overcome the current trust barrier facing machine intelligence.


–


Image Credit: Franck V.

The post Building trust in Machine Learning and AI in digital lending appeared first on e27.