A three-year-old Stacy sat with her dad beside her one beautiful Sunday morning. It was the one day where she got to interact and play with him. The rest of the week, John was busy experimenting with theories and building machine learning models that could have an impact on the world. Like an enthusiastic toddler, Stacy would get up early on Sunday mornings to do all her favourite things with her father. One of them included building Lego structures.

This Sunday was different from the rest because it was Stacy’s birthday, and John had bought her a brand new Lego set with unique characters. As Stacy and John sat in the backyard early in the morning, John gave her the present.

An excited Stacy immediately unwrapped the Lego box and took out the pieces. Just like every other time, she started building it in an upward direction. Stacy loved building towers, traffic lights, and skyscrapers and was going to do the same this day.

The only difference was that she would now make the all-new Lego character sit on top of those structures. But that’s when John interrupted and suggested building it sideways.


Could Stacy build the Lego sideways or in any other direction when she had only ever built it upwards? Five minutes later, Stacy started sticking tape to the wall. Fifteen minutes in, little Stacy exclaimed, “Here, Dad! Batman is now sitting on my Lego train track that enters the wall!”

A machine inspired experiment

John’s attempt to encourage Stacy is an example of an experiment. Stacy could easily apply her knowledge and experience of building Legos upwards to building them sideways, and she could do all this within minutes. What Stacy demonstrated can be called mere common sense, which even the most advanced computers of today’s generation fail to display.

Unlike Stacy, who could quickly learn to apply her existing knowledge to a new context, modern artificially intelligent systems still find such a case hard to reproduce. Like John, many other scientists and experts believe that artificial intelligence could learn from babies. While this could open many possibilities for the future, it could also impact the world in ways we can’t even imagine.

Scientists in the past have tried feeding direct knowledge to computers to create artificial intelligence. However, since that approach failed miserably, we now have machine learning to the rescue.

Today’s machine learning techniques enable computers to learn on their own by figuring out what to do from a large dataset. Researchers from all across the world claim that these machine learning models can be trained to learn almost anything and everything. That even includes one of humanity’s most prized possessions: common sense.

The idea of common sense

But it seems like these researchers are ignoring decades of scientific work in cognitive science and developmental psychology, which demonstrates that humans have some innate abilities. These inherent capabilities are programmed instincts that appear in children as they are born and grow up. They help us think abstractly and clearly, and they contribute fundamentally to what we call common sense.

For a more precise distinction, our machine learning models today rely on a large number of examples to produce accurate results. Even the meta-learning models that learn from only a limited number of examples need a few hundred for the task. But the underlying question is, do children learn in this manner? Did John show Stacy a thousand cases of building a Lego sideways before she could make it?

In another instance, let’s take a much simpler problem, where a child learns to identify objects around them. Do we need to show a child a few thousand apples, before they recognize it as one? The answer is no, and it sounds radically mindless when we apply machine learning methods to human beings. The answer to why such practices are inapplicable to even the most underdeveloped human brains lies in our innate abilities.

Artificial intelligence researchers ought to bring these qualities and instincts of a child’s learning to complex machines. However, most systems that are riding high on machine learning’s success seldom treat this as important. Computer scientists appreciate simplicity, and one of their goals is to reduce the debugging of complex Java development code.


Josh Tenenbaum, a psychologist at the Massachusetts Institute of Technology (MIT) in Cambridge, says that big companies like Facebook and Google are another reason why artificial intelligence has reached its limits and keeps being pushed in the same direction. These companies are merely interested in solving short-term problems using machine learning.

Some of these are facial recognition and web search, which can be done by training a model on a vast amount of data. Since such models work remarkably well, there seems to be no need to explore an intelligence that is innate, like a child’s.

The way machines learn

However, there is no doubt that the existing techniques have led to some out-of-the-ordinary breakthroughs. Today, you can give a machine millions of pictures of animals, each labelled as a cat or a dog. Even in the absence of any further information about the characteristics of cats and dogs, the machine will be able to classify new examples of cats and dogs. To achieve this, the machine learning models abstract some statistical patterns from the pictures and then use them for classification.
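To make the idea of abstracting statistical patterns from labelled examples concrete, here is a minimal sketch. It is not how any production image classifier works; it assumes each picture has already been reduced to two made-up numbers (the feature values and the `train_centroids` and `classify` names are purely illustrative), and it uses a simple nearest-centroid rule in place of a deep network.

```python
from statistics import mean

def train_centroids(examples):
    """Average the feature vectors of each label: the 'statistical pattern'."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: [mean(column) for column in zip(*rows)]
        for label, rows in by_label.items()
    }

def classify(centroids, features):
    """Pick the label whose average example is closest to the new one."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))

# Hypothetical labelled data: two invented features per picture.
labelled = [
    ([0.9, 0.2], "cat"), ([0.8, 0.3], "cat"), ([0.7, 0.1], "cat"),
    ([0.3, 0.8], "dog"), ([0.2, 0.9], "dog"), ([0.4, 0.7], "dog"),
]
centroids = train_centroids(labelled)

print(classify(centroids, [0.85, 0.25]))  # a new, unlabelled example
print(classify(centroids, [0.25, 0.75]))
```

The classifier never learns what a cat is. It only learns where the labelled cat examples sit on average, which is why it needs the labels in the first place.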

Similarly, a system called AlphaZero, from Google’s DeepMind, can be trained to play a game such as chess or Go right from scratch. The logic behind it is simple. When a computer plays a game, it gets a score. Over time, as it keeps on playing millions of such games, it learns to maximize its score. AlphaZero has also been able to beat Stockfish, one of the strongest conventional chess engines. The surprise is that these models don’t even understand the mechanics of the game; they simply go on finding statistical patterns to improve their play. In other words, they are not learning intelligently but learning from their experiences.
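The play, get a score, play again loop can be sketched in a few lines. This is a toy illustration only, far simpler than anything DeepMind has described: it assumes a made-up one-move "game" with three possible moves and fixed hidden scores, and the `learn_by_playing` function, its parameters, and the score values are all hypothetical.

```python
import random

def learn_by_playing(score_of_move, n_moves, plays=2000, explore=0.1, seed=0):
    """Estimate the average score of each move purely from repeated play."""
    rng = random.Random(seed)
    average_score = [0.0] * n_moves
    times_tried = [0] * n_moves
    for _ in range(plays):
        if rng.random() < explore:
            # Occasionally try a random move to see what it scores.
            move = rng.randrange(n_moves)
        else:
            # Otherwise play the move that has scored best so far.
            move = max(range(n_moves), key=lambda m: average_score[m])
        score = score_of_move(move)
        times_tried[move] += 1
        # Running average of the scores observed for this move.
        average_score[move] += (score - average_score[move]) / times_tried[move]
    return average_score

# Hypothetical game: the learner is never told these scores up front.
hidden_scores = [0.0, 1.0, 0.2]
estimates = learn_by_playing(lambda move: hidden_scores[move], n_moves=3)
best_move = max(range(3), key=lambda m: estimates[m])
print(best_move)
```

Nothing in the loop encodes why one move is better than another. The learner only keeps a running tally of what scored well, which is the sense in which it learns from experience rather than from understanding.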

Despite being of great use, these algorithms have a limitation. The more data you feed them, the better they learn. But when it comes to generalizing from all this data, they fail miserably.

Imagine this in the context of babies. They don’t need millions of samples to learn. They learn much more generally and accumulate a more robust kind of knowledge when compared to artificially intelligent machines. For researchers, the future of artificial intelligence lies in unravelling the mystery behind the way babies learn, and the way devices can implement it.

Thinking about the big picture, scientists need to develop AI that can solve far harder problems, involving more common sense and flexibility, than it can today. If we want to imagine a world with autonomous cars that can run in chaotic traffic, or bots that explain the news to a reader, we need to build AIs that solve problems in a more generalized environment.

However, with some research going on in the world, there is some hope left for the future. The Massachusetts Institute of Technology (MIT) recently launched a research initiative called Intelligence Quest to understand human intelligence in engineering terms. The initiative is already raising millions of dollars and is, in some ways, trying to answer a question similar to that of nature versus nurture in learning theories.

Even the Defense Advanced Research Projects Agency is working on a project called Machine Common Sense to understand how babies and young children learn. As astonishing as it sounds, the government research lab that helped invent the Internet and the computer is now partnering with child psychologists and computer scientists for the task.

A different approach to learning

There might be evidence for developing a system with childlike learning capabilities because many credible organizations across the world are investing in such research. But there are problems with machines that we seek to solve. Human babies, the smartest learners of any species, learn by trial and error. Apart from this, cognitive development scientists say that as babies, we are born with some basic instincts that help us quickly gain a flexible common sense.

For machines, it has been challenging because we haven’t seen any conceptual breakthroughs in machine learning since the 1980s. Most of the popular machine learning algorithms came into the picture back then. To date, all we have seen in the name of advancement is ever-expanding sets of data being used to train machine learning models on a large scale.

Young children, on the other hand, learn differently from machines. The data that a machine learns from is generally curated by people, of good quality, and clearly categorized.

You won’t find people posting blurry pictures of themselves. All they try to do is display the best shot. Similarly, games like chess are defined by people to work within a fixed range of possibilities and under specific rules. But when it comes to children, it is the opposite of clarity.

Ongoing research at Stanford University suggests that babies see a series of chaotic and poorly filmed videos that consist of a few familiar things such as toys, parents, dogs, food, etc. These move around at odd angles and are the opposite of millions of clear photographs present in an internet data set.

Another factor comes into play when machines learn. It is called ‘supervision’. Machines need to be told what they’re learning. When images are annotated, they are given particular labels. Similarly, when machines play games, each of their moves is scored. All this helps the machine see exactly what it needs to learn.

The data for children is, however, largely unsupervised. Parents do tell their babies things such as ‘good job’ or ‘danger’, or tell them which animal is shown in a picture. But it is mostly to keep them safe and sound. A large part of a baby’s learning is spontaneous and self-motivated.


Even if we provide large data sets to a machine, it cannot figure out the same kind of generalizations as children do. Its knowledge can be considered shallow, and it can be fooled very easily with what are known as adversarial examples. For example, if you give an AI an image that has jumbled pixels, it will most probably classify it as a cat if the pixels fit the right statistical pattern from its training. However, a child will seldom make that mistake.
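A rough way to see why a purely statistical classifier can be fooled is the toy sketch below. It assumes a hypothetical four-pixel "image" and a linear scoring rule with invented weights, and it nudges every pixel slightly in whichever direction raises the cat score. Real adversarial attacks on deep networks are more involved; this only illustrates the underlying idea.

```python
def score(weights, pixels):
    """Weighted sum of pixel values: the classifier's only notion of 'cat'."""
    return sum(w * p for w, p in zip(weights, pixels))

def label(weights, pixels):
    return "cat" if score(weights, pixels) > 0 else "not cat"

def nudge_towards_cat(weights, pixels, step):
    """Shift each pixel by at most `step` in the direction that raises the score."""
    def direction(w):
        return 1 if w > 0 else -1 if w < 0 else 0
    return [p + step * direction(w) for w, p in zip(weights, pixels)]

# Hypothetical learned weights and a hypothetical image that is not a cat.
weights = [0.5, -0.3, 0.8, -0.6]
image = [0.2, 0.6, 0.1, 0.4]

adversarial = nudge_towards_cat(weights, image, step=0.15)

print(label(weights, image))        # the original image
print(label(weights, adversarial))  # the slightly altered image
```

Each pixel moves by only 0.15, yet the label flips, because the classifier responds to the weighted pattern of pixels and nothing else.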

In a similar instance, the advanced AI models that we have today, such as AlphaZero, do not apply common sense in their learning. If an AI learned the game Go on a standard 19 × 19 board, it wouldn’t be able to demonstrate the same playing skills on a 21 × 21 board. Instead, the AI would have to learn the game anew. Scientists have also tested this idea in the domain of odd and even numbers. A network was trained to take an even number as input and simply spit it back out. However, when the same network was tested with odd numbers, it faltered badly. This isn’t the case with a child.
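The even and odd number experiment can be reproduced in miniature. The article does not describe the original network, so the sketch below makes several assumptions: numbers are written as four binary digits, the "network" is a single layer of linear units with weights starting at zero, and it is trained by plain gradient descent. All function names are illustrative.

```python
def to_bits(n, width=4):
    """Binary digits of n, most significant first."""
    return [(n >> shift) & 1 for shift in reversed(range(width))]

def from_bits(bits):
    value = 0
    for bit in bits:
        value = value * 2 + bit
    return value

def train_identity(numbers, width=4, epochs=200, rate=0.1):
    """Train one linear unit per output bit to copy its input."""
    weights = [[0.0] * width for _ in range(width)]
    for _ in range(epochs):
        for n in numbers:
            x = to_bits(n, width)
            for j in range(width):
                out = sum(w * xi for w, xi in zip(weights[j], x))
                error = out - x[j]  # the target is the input itself
                for i in range(width):
                    weights[j][i] -= rate * error * x[i]
    return weights

def predict(weights, n, width=4):
    x = to_bits(n, width)
    outputs = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return from_bits([1 if o > 0.5 else 0 for o in outputs])

evens = [0, 2, 4, 6, 8, 10, 12, 14]
weights = train_identity(evens)

print([predict(weights, n) for n in evens])       # numbers seen in training
print([predict(weights, n) for n in [1, 7, 15]])  # odd numbers, never seen
```

In this setup the even numbers come back unchanged, while the odd ones come back with the last digit cleared, so 7 is returned as 6. The last bit was always zero during training, so the weights attached to it never moved. A child who has grasped "repeat the number" would not need to see an odd example first.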

Conclusion

An AI training program keeps an eye on the learner and tells it whether it is right or wrong at every step of the way. This is quite unlike how human children usually learn; even under helicopter parenting, a child might do a designated task well but fall short in critical matters such as creativity and resilience.

Alter the problem by even the smallest degree, and they will have to learn all over again. That’s the case with machines, and it is exactly how they differ from human children.

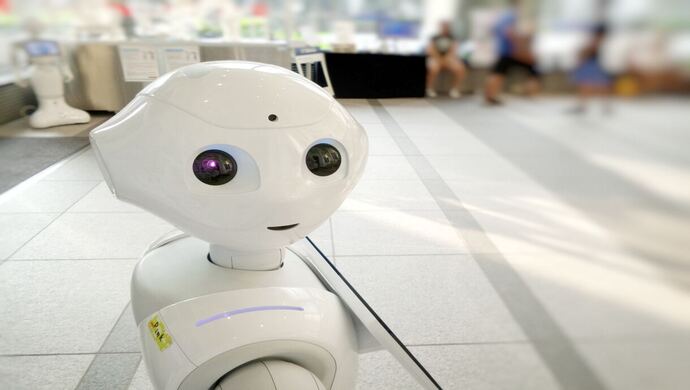

No doubt we are far from reaching a human-like level of intelligence in machines. While this might not be our sole purpose, we still want an AI like C-3PO that can make us even smarter. Our only way to achieve a desirable AI is to take cues from babies and create AIs that are more curious than obedient.

–


Image Credit: Franck V

The post How learning like babies can be the future of AI? appeared first on e27.